Recently, I’ve been listening to lots of podcasts and reading twice as many articles about the shift made by tech companies from employing cheap labor to train AI models to using AI evaluations (or “evals”) to measure the quality, accuracy, and usefulness of an AI model’s output.

Evals are essentially usability tests or rubrics for the model itself. And…just like you wouldn’t launch a product without validating its interface, teams now won’t ship an AI feature without testing the model’s behavior.

Interestingly enough, evals are now so hot and sought after that the fastest growing company in history (at least according to its founder, Brendan Foody, and Lenny Rachitsky, the voice of Lenny’s Newsletter) is Mercor, which is more or less a job marketplace for experts in any field, who can help design evals for other companies. For instance, should a company want to build a super-accurate legal AI chatbot, they can hire lawyers on Mercor to help them write evals for their products.

From a design perspective, this is interesting for many (x10) reasons!

Firstly, and perhaps most importantly, the purpose of evals, at their core, is to improve the quality and accuracy of AI-derived answers to questions. This is part design and part engineering. And…it’s designers who think and care most about the human side of quality.

Secondly, evals are experiences writ large. And who better to shape experiences than designers?!

Finally, by bringing designers into the eval conversations, the conversations (and the evals) become more strategic. The best products aren’t the ones with the shiniest AI. They’re the ones that are most useful to customers.

Read on for a deep dive…

— Justin Lokitz

Design Deep Dive

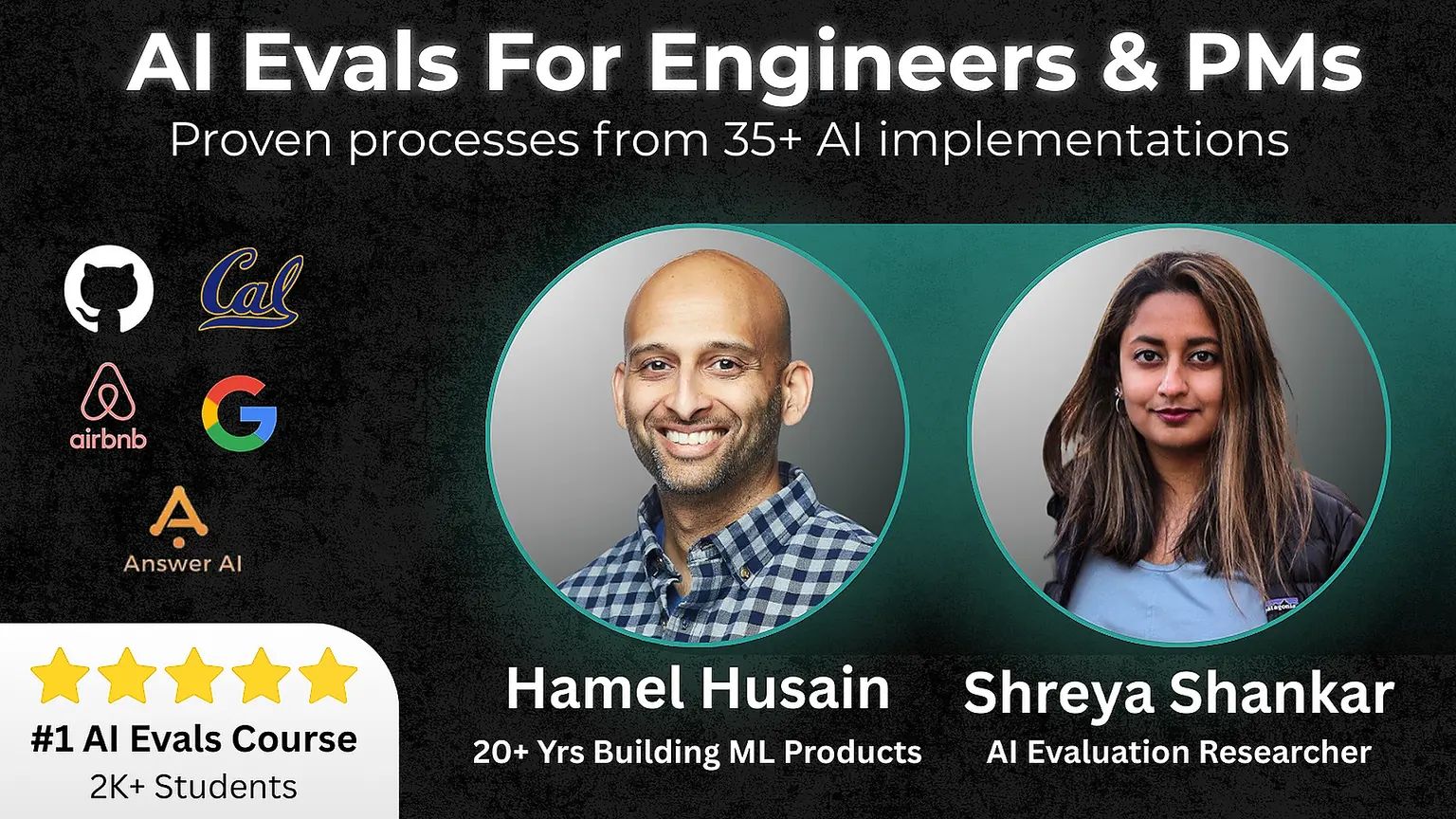

AI evals have become one of the most talked-about topics in product circles. Engineers are spinning up eval pipelines. Product managers are obsessing over benchmarks. Influencers like Hamel Husain, Shreya Shankar, and Brendan Foody are leading the conversation in newsletters, Slack groups, and meetups. Every startup with an AI feature now has some version of an evaluation stack.

What’s missing from most of these conversations, trainings, and articles is…you guessed it: design! Not as an afterthought. Not as a UI polish team. As infrastructure.

Designers—and especially design leaders—have a critical role to play in how AI systems are evaluated, trusted, and ultimately adopted. It’s not a matter of opinion. It’s a structural shift.

As Rachel Kobetz so succinctly puts it…

“With GenAI, design no longer lives at the surface, shaping only what customers see. It has become the architecture beneath how roadmaps are defined, how systems behave, and how companies compete.”

The moment we’re in is more than a trend. It’s a threshold. And…as AI evals are about defining quality, no one is better suited to shape human-centered definitions of quality than designers.

This moment calls for more than polish. It calls for judgment. Here’s why…

1. Designers know how to define human-centered quality

Most product teams building with or for AI today begin with benchmark metrics, like latency, BLEU scores, token usage, and accuracy. These are helpful for model comparisons. But they say little about whether the model is actually useful in context.

Designers are already trained to ask better questions:

Is this response clear?

Does it match user intent?

Does it feel trustworthy?

Does it reduce friction or add it?

In a world where AI outputs change every time you tweak a prompt or reword an input, consistency, usefulness, and trust aren’t just UX goals; they’re product requirements. Full stop.

Designers have frameworks to measure those things. We’ve used them in user flows, experience maps, content hierarchies, and interaction design for decades. Now we need to apply them to the model’s behavior itself.

This is as much about accuracy as it is about creating and delivering real, lasting value for the customer…which is the point, isn’t it?

2. Evals are experiences, and designers should be shaping them

AI evals aren’t just metrics. They’re entire workflows.

Prompt writing, response scoring, human-in-the-loop ranking, error tagging—these tasks happen in interfaces that desperately need design attention.

Right now, most internal tools for AI evaluation are built for speed. But when you’re asking people to make judgment calls, like “Was this answer helpful? Is it harmful? Does it sound human?”, clarity and usability become critical.

Poorly designed eval tools lead to inconsistent results. Inconsistent results lead to faulty conclusions. That’s a design problem.

When designers step in, they don’t just clean up the UI. They reduce ambiguity. They make it easier to spot patterns. They help turn subjective input into useful signals. They scale good judgment.

If you’ve ever improved an internal workflow tool, you already know how to do this.
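To make “inconsistent results” concrete, here is a minimal sketch of one common way to check whether two reviewers are reading an eval question the same way: Cohen’s kappa, which measures agreement beyond what chance alone would produce. The reviewer labels below are made up for illustration, and the function name is my own. Treat it as a starting point for a conversation with your team, not a finished tool.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labeled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)

    # Share of items where the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Agreement expected by chance, based on how often each rater used each label.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical answers from two reviewers to "Was this answer helpful?"
reviewer_1 = ["yes", "yes", "no", "yes", "no", "no", "yes", "no"]
reviewer_2 = ["yes", "no", "no", "yes", "no", "yes", "yes", "no"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.2f}")  # prints "kappa = 0.50"
```

A kappa near 1 means reviewers agree far more than chance would predict; a value near 0 suggests the question, the labels, or the interface is leaving too much room for interpretation. That second case is usually a cue to redesign the scoring task rather than to blame the reviewers.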

3. Designers make AI eval strategic, not just operational

Great AI products don’t win because they’re the most advanced. They win because they’re the most useful…and provide the most value.

Designers see the difference between novelty and value. Between technically correct and emotionally intelligent. Between functional and delightful.

When designers co-own AI evals, they surface problems early:

Where trust is eroding

Where outputs are overwhelming…or simply too open-ended

Where certain customer groups are consistently left out

They also help translate insights from evals into strategy, guiding roadmaps, feature priorities, and go/no-go decisions.

Moving from tactical execution to strategic design leadership isn’t just challenging — it’s transformative. It’s the difference between being part of the conversation and leading it, between delivering on today’s expectations and shaping tomorrow’s possibilities.

So what should designers do?

Start small. Ask your team how they evaluate AI behavior. Join a model quality review. Offer to prototype a better scorecard. Audit the current evaluation tooling for friction. Find ways to create value internally first!

Here are a few quick entry points:

Turn user feedback into evaluation criteria

Translate vague goals (“more helpful”) into judgment frameworks

Run research on how real people interpret AI responses

Help define what “good enough” means before launch
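As a rough illustration of what a “judgment framework” and a “good enough” bar could look like once written down, here is a small sketch that turns the four designer questions from earlier in this issue into a weighted rubric. The weights, the 1-to-5 rating scale, and the 0.75 launch threshold are all assumptions I picked for the example; your team would set its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    question: str
    weight: float  # hypothetical relative importance


# Questions come from the article; the weights are illustrative assumptions.
RUBRIC = [
    Criterion("clarity", "Is this response clear?", 0.3),
    Criterion("intent", "Does it match user intent?", 0.3),
    Criterion("trust", "Does it feel trustworthy?", 0.2),
    Criterion("friction", "Does it reduce friction rather than add it?", 0.2),
]

GOOD_ENOUGH = 0.75  # hypothetical launch bar, agreed on before shipping


def score_response(ratings):
    """Combine 1-to-5 ratings per criterion into a weighted score from 0 to 1."""
    total = 0.0
    for criterion in RUBRIC:
        rating = ratings[criterion.name]
        if not 1 <= rating <= 5:
            raise ValueError(f"{criterion.name} rating must be between 1 and 5")
        # Rescale 1..5 to 0..1, then apply the criterion's weight.
        total += criterion.weight * (rating - 1) / 4
    return total / sum(c.weight for c in RUBRIC)


# Hypothetical ratings a reviewer gave to one AI response.
ratings = {"clarity": 5, "intent": 4, "trust": 3, "friction": 4}
score = score_response(ratings)
verdict = "meets the bar" if score >= GOOD_ENOUGH else "needs work"
print(f"score = {score:.3f} ({verdict})")
```

The useful part is less the arithmetic than the argument it forces: deciding which questions matter, how much each one counts, and where the bar sits is exactly the kind of judgment designers are trained to make explicit.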

Every eval system is an opportunity for better design. And every decision about AI behavior is a decision about product experience. And…product experience is your job.

Bottom line

The next generation of AI products won’t be defined by model performance alone. They’ll be defined by whether those models work for people.

Evals are where that definition gets made.

And…designers are the ones who know how to define quality when the answers aren’t obvious.

This is your moment to step in—not as the last layer of polish, but as a co-architect of how AI behaves.

AI needs your judgment. Pull up a chair (or a standing desk)…and create value by design.

Subscribe to Design Shift for more conversations that help creative professionals grow into strategic leaders.
