Call it a frequency illusion, the Baader–Meinhof phenomenon, or whatever you like, but over the course of the last few days, I’ve read or been part of more discussions about design’s role in culture and AI adoption than I have in months. And…it’s not me who’s bringing this to the table.

One of the key arguments being made is that AI adoption, while seemingly widespread, is beginning to sputter, mostly because people 1) don’t know how to use the tools and/or 2) don’t trust AI.

In fact, MIT’s State of AI in Business 2025 report confirms what we’ve been seeing across design-led teams: the biggest obstacles to AI success aren’t technical — they’re human. The study found that 95% of enterprise AI initiatives fail to deliver measurable value, largely because of cultural friction, poor trust, and low psychological safety. Meanwhile, employees are adopting “shadow AI” tools on their own, proving that curiosity, not compliance, drives real readiness. The report also highlights a widening “learning gap”: most systems can’t adapt or remember.

Of course, underneath every promise of productivity lies a quieter problem — fear, indifference, and cultures built on control rather than curiosity. As my good friend Nenuca Syquia reminds us in her work on AI readiness, real progress depends on shifting mindsets, not just upgrading software. Across the Design Shift community, we’ve seen it firsthand: Mario Ruiz calls it a data-trust issue, Kevin Flores frames it as a leadership gap, and Nick Cawthon sees it as a design opportunity.

The real question is NOT “How do we make AI smarter?” but “How do we make humans ready?” That’s the work of design (i.e., translating between fear and possibility, and building systems people actually want to trust)!

Read on for a deep dive…

— Justin Lokitz

Design Deep Dive

Why ethical AI adoption starts (and sometimes ends) with us

Let’s be honest: the hardest part about adopting AI isn’t the tech, in any way, shape, or form. It’s us. Yep! We humans are the challenge.

It’s not the models, not the metrics, not the latest tool stack. It’s the messy, emotional, deeply human part of the system: fear, pride, culture, incentives, trust. All of the things that make up a healthy design research diet.

As Nenuca Syquia, the CEO and founder of Better Organizations by Design, writes in her piece on AI readiness, thriving in the age of intelligent systems requires readiness across mindset, discernment, and culture. Most organizations check the technical box but skip the human one. The result? Fear creeps in, apathy sets in, and even the best AI initiatives stall before they start.

Across the Design Shift community (from my writing partner, Mario Ruiz, on enterprise AI agents, to Gregory Stock on listening, Kevin Flores on metrics and outcomes, and Nick Cawthon on AI-driven UX), a clear theme is emerging:

Designers are the translators between human trust and machine intelligence.

The Real Barrier Isn’t Technical, It’s Emotional

As you’ve no doubt heard, or perhaps witnessed firsthand, whenever a new AI tool rolls out, reactions split fast. Some people dive in. Others freeze. Most quietly wait to see if it sticks.

Nenuca points out that indifference is the most underestimated obstacle. This is not open resistance complete with pitchforks and torches, but a kind of disengaged shrug. “If people don’t feel involved,” she writes, “they won’t feel responsible.”

This is where design earns its keep. A couple of weeks ago, I wrote about something similar with regard to AI evaluations. In that article, I argued that evaluations are as much about designed experiences (that either build or erode trust) as they are about AI accuracy or latency. Designers can reframe those cold, technical checkpoints into human conversations that surface emotion: Does this make sense? Does it feel fair? Does it respect intent?

The simple act of acknowledging fear and curiosity as valid signals turns tension into trust. That’s not training. That’s design.

Culture Eats AI for Breakfast

I have been using the line often attributed to Peter Drucker, “culture eats strategy for breakfast,” in my presentations and lectures for years, and I think it has never been truer than right now. The gist is this: you can’t code your way out of a bad culture.

I saw this on full display while facilitating a 14-week strategy activation sprint at MVP Healthcare. During the listening phase of the activation sprint, the team tasked with launching an AI initiative found that there were already many pockets within the organization where that very initiative was being tested. However, there was no conversation or cohesion. In other words, the challenge was not necessarily technology; it was about culture (and creating connections within that culture).

Nenuca outlines three traps that quietly kill AI adoption:

Mandates without meaning — “Use it” without “Here’s why.”

Punishment for experimentation — where failure equals incompetence.

Metrics that reward activity over outcomes — hours over impact.

If creativity is penalized and safety is rewarded, AI becomes a checkbox, not a catalyst.

Design leaders have leverage here. We can reframe AI as a way to elevate human work — to shift energy from repetitive tasks to creativity, storytelling, and systems thinking. As Kevin Flores reminds us, “Design’s value shows up in outcomes, not outputs.” When teams are measured by customer impact, not screen count, AI becomes an ally, not a threat.

From Utility to Delight

Like most designers and design leaders, I wholeheartedly believe that delight is a differentiator.

AI is already making basic experiences uniform and frictionless. But frictionless isn’t memorable. As any game designer will tell you, overcoming some obstacle (i.e., friction) is what makes games (and most designs) memorable. When it comes to designing for AI, we designers and design leaders should aim higher — for clarity, story, and emotional resonance.

A culture obsessed with efficiency will always undervalue delight. Yet delight is what keeps humans in the loop. It’s what makes us trust the systems we build. When teams are rewarded for making people feel understood (not just for making workflows faster), the products that emerge will naturally carry more humanity.

Because in the end, delight is trust made visible.

Designing for Trust and Equity

Ethical AI is also about aligning systems with human values, like fairness, transparency, inclusivity, and sustainability. This isn’t checklist stuff. It must be designed.

Nenuca calls this discernment: seeing both the machine and its ripple effects. Designers have that lens. They’re trained to ask uncomfortable questions like “Who benefits?” and “Who gets left out?”

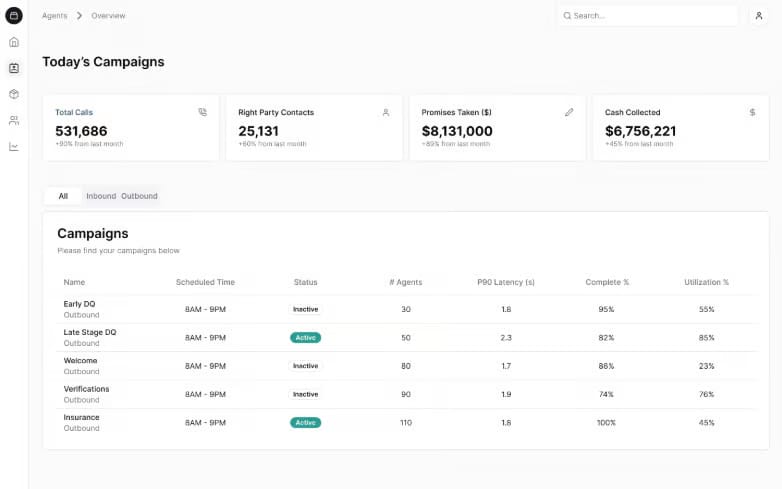

Mario’s work on enterprise AI agents reinforces this: if users can’t see where data comes from or how reasoning works, they won’t trust the results. Transparency isn’t just a technical feature. It’s hard to quantify, yet it is a core part of ethical design. Showing reasoning, confidence levels, or even sources creates accountability.

Salient AI Agents for loan servicing. Source: Salient

Equity sits right beside trust. Inclusive design isn’t charity; it’s Strategy (with a capital “S”)! Systems built for a narrow “default user” will amplify bias by default. Testing across roles, abilities, and contexts expands what “intelligent” even means. When we design for diversity, we design for resilience.

Experimentation Is the New Ethics

And…as everything is moving faster than ever in the age of AI, ethics can’t just live in policy docs; it has to live in practice.

Nenuca advocates for sandbox sessions and open demos where people can play with AI tools safely. Nick Cawthon makes a related point in AI Meets UX: “Design’s value lies in direction, not deliverables.” Real learning comes from testing, breaking, and iterating, not just theorizing.

The best design-led teams we’ve seen treat experimentation like a moral act. They invite skepticism, make results visible, and allow mistakes to teach. It’s how human judgment and machine intelligence grow together.

Designers as Translators Between Intention and Code

In my interview with Nick, he put it bluntly: “Designers now write the rules for algorithms.”

This is the new frontier. When designers collaborate deeply with engineers, they ensure ethical intent survives translation. They shape not just the interface, but the intelligence.

That takes leadership, courage, and taste. Kevin Flores calls it “comfort in ambiguity.” Gregory Stock calls it “taste earned through deep listening.” Either way, this is what allows designers to separate signal from noise — to decide not just what can be built, but what should be.

The Climate Lens

And then there’s the planet…

As Marc O’Brien argues in Designing for the Climate as a Creative Act, sustainability must be the standard rather than a specialization or add-on feature. AI systems consume massive amounts of energy. Ethical design has to consider when not to automate, when not to scale, and when not to deploy.

Designers are uniquely positioned to bring environmental awareness into the earliest technical decisions — from data center selection to model size to energy offsets. The same empathy we bring to users must extend to ecosystems.

The Human-Centered Future

Ethical AI adoption has less to do with frameworks and more to do with mindsets, incentives, and courage.

Fear, indifference, and outdated success metrics are human problems — and they demand human-centered solutions. We designers and design leaders are the ones best equipped to build those bridges. We translate emotion into insight, values into criteria, and curiosity into systems that scale trust.

Our job isn’t to make machines more human. It’s to make humans more confident in the machines they choose to build.

Because the future of AI won’t be defined by who has the most data…but by who earns the most trust.

